Artificial intelligence (AI) is rapidly moving from experiments in the lab to real use in hospitals, research centers, and pharmaceutical companies, with over $30 billion invested in healthcare AI companies in the last three years. In drug development, it is helping scientists analyze trial data and scan vast safety databases. In healthcare delivery, it is supporting clinicians with documentation, triage and decision support. And across both domains, it is increasingly being used to generate insights from real-world data—electronic health records, claims data, registries—that can complement traditional clinical research.

The potential is transformative. But in these high-stakes environments, failure is not an option. Patients expect safe and effective care, regulators demand accountability and clinicians need confidence that AI systems will not slow them down or put them at risk. This is where defensible AI comes in: systems that perform reliably, can be trusted by those who use them and can stand up to scrutiny when questions inevitably arise.

Why defensibility matters

AI is only transformative if people are willing to use it. That willingness depends on trust. A physician will not rely on an AI-generated summary of a patient record unless it is accurate, understandable and consistent with clinical workflows. A regulator will not accept an AI-enabled trial endpoint unless the methods are transparent and validated. A life sciences team will not scale an AI solution if they cannot defend it to internal reviewers, compliance officers and external partners.

Defensible AI bridges these expectations. It is not simply a matter of compliance, nor is it only about technical accuracy. It is about embedding confidence—among doctors, scientists, regulators and patients—that the AI application is effective, reliable and aligned with their goals. In this sense, defensibility is as much about strategy as it is about governance. Organizations that treat it as a strategic priority gain not only regulatory readiness but also adoption and long-term impact.

Barriers along the way

Of course, getting there is not easy. Healthcare and life sciences organizations face challenges that are both technical and cultural. Real-world data may be incomplete, inconsistent or biased, and without careful handling, it can skew results. Models that perform well in testing may prove fragile once deployed, degrading over time or producing outputs that are difficult to explain. Clinicians may resist using AI that adds steps to their workflow, while regulators must navigate a patchwork of evolving standards across geographies.

None of these barriers is insurmountable. In fact, they are precisely why defensible AI is necessary. Governance provides the scaffolding to address data quality, document model assumptions and create oversight processes that evolve alongside regulations. But governance alone is not enough. Defensibility also requires strategy—knowing where to start, how to scale and how to ensure AI delivers real value.

A lifecycle approach

The most effective way to think about defensible AI is as a journey across the lifecycle of both medicines and care. In early planning, it means aligning teams on principles of safety, transparency and clinical relevance. During data preparation, it means establishing provenance and fairness checks, especially when drawing on real-world data that was never collected with AI in mind. In model development, it requires rigorous validation and documentation so results can be reproduced and defended. And once deployed, it demands continuous monitoring to detect drift, maintain performance and respond to new regulatory expectations.
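To make the monitoring step concrete, here is a minimal sketch of one common drift check, the Population Stability Index (PSI), which compares how a model input or score is distributed at validation time versus in production. The function name `population_stability_index`, the fixed-width binning, and the sample values are illustrative assumptions, not a method described in this article. The 0.1 and 0.25 cut-offs often quoted for PSI are rules of thumb, not regulatory thresholds.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Return the PSI between a baseline sample and a current sample.

    Bins are equal-width over the baseline range (an assumption made for
    simplicity; quantile bins are also common). Values outside that range
    are counted in the first or last bin.
    """
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")

    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if the baseline has no spread.
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Hypothetical risk scores: validation-time sample vs. a shifted production sample.
validation_scores = [i / 100 for i in range(100)]
production_scores = [0.5 + i / 200 for i in range(100)]

psi = population_stability_index(validation_scores, production_scores)
if psi > 0.25:  # rule-of-thumb threshold, chosen here as an assumption
    print(f"PSI={psi:.2f}: distribution shift detected, trigger a model review")
```

A check like this only flags that inputs or outputs have shifted. Deciding whether the shift matters clinically still belongs to the oversight process.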

This lifecycle framing matters because it turns defensibility into something actionable. Rather than treating governance as a set of rules to follow, it becomes a living process that supports innovation while protecting patients and preserving trust.

Examples in practice

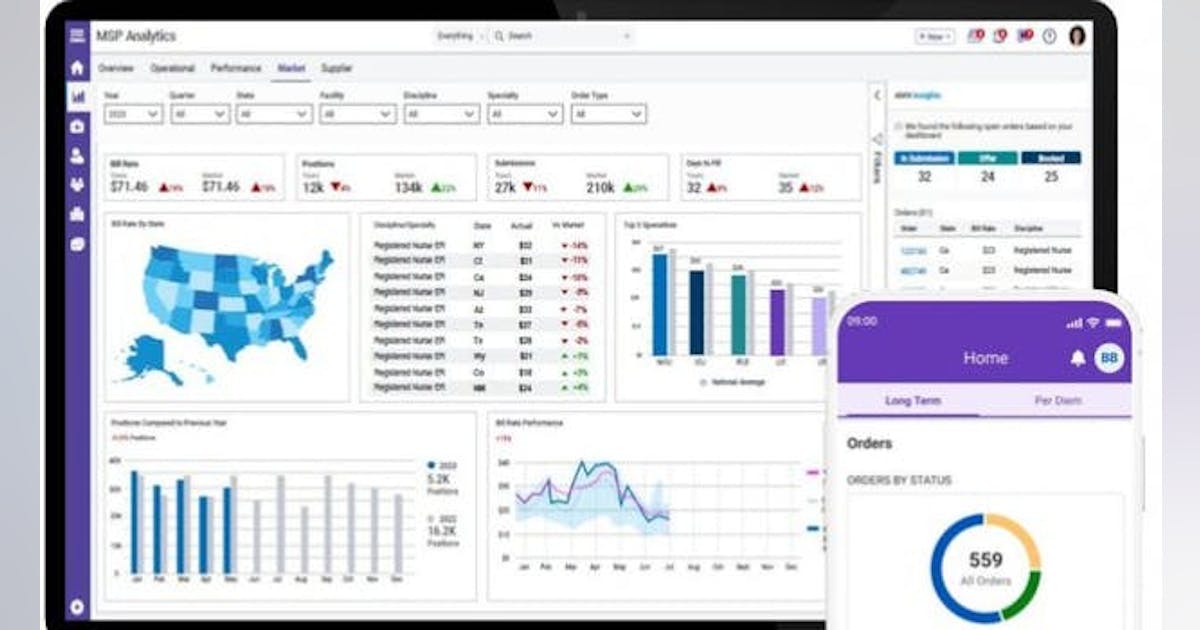

One clear area where defensibility matters is the use of real-world data (RWD) to generate real-world evidence (RWE). Regulators such as the Food and Drug Administration (FDA) and the European Medicines Agency (EMA) are already examining how RWE can support regulatory decisions, from safety monitoring to external control arms in clinical trials. But reproducibility studies have shown that many RWE findings are fragile if data definitions, analytic methods or documentation are incomplete. An evidence platform that standardizes data, embeds transparency and enforces clear governance can help ensure RWE is defensible.
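One small piece of such a platform can be sketched in code: recording the exact data definitions and analytic choices behind a result, so that a later rerun can be checked against them. The example below is a hypothetical illustration, not a description of any specific evidence platform. The function name `analysis_fingerprint` and every field in the sample specification are assumptions made for the sketch.

```python
import hashlib
import json


def analysis_fingerprint(spec: dict) -> str:
    """Return a stable SHA-256 fingerprint of an analysis specification.

    Keys are sorted before hashing, so two specifications with the same
    content produce the same fingerprint regardless of key order.
    """
    canonical = json.dumps(spec, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical specification; all values are placeholders for illustration.
spec = {
    "cohort_definition": "adults with asthma, at least 1 year of follow-up",
    "data_snapshot": "2024-01-01",
    "outcome": "exacerbation within 12 months",
    "method": "propensity-score matching",
}

# Store the fingerprint alongside the published result.
print(analysis_fingerprint(spec))
```

If a cohort definition or method changes, the fingerprint changes with it, which gives reviewers a simple way to confirm that a reproduced result used the documented specification.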

Infrastructure is another critical piece. Initiatives like the DARWIN EU network aim to create a pan-European system for analyzing real-world data in a way that regulators can trust. By harmonizing disparate sources under a common governance and data model, DARWIN EU shows how scale and defensibility go hand in hand. Similar efforts, such as the UK’s Optimum Patient Care Research Database (OPCRD), highlight the importance of building data assets that are both rich and reliable, with quality controls and privacy safeguards built in from the outset.

These examples illustrate how defensibility is already shaping the future of AI and evidence generation. It is not enough to have algorithms that work in isolation; they must operate within platforms and networks that provide transparency, reproducibility and governance. For healthcare providers, regulators and pharmaceutical companies alike, the lesson is the same: innovation lasts only when it is supported by infrastructures that make evidence credible and AI defensible.

Strategy at the core

What ties these stories together is strategy. Defensibility is not an afterthought layered on top of innovation. It is the way to ensure innovation sticks. For pharmaceutical companies, this means AI that accelerates discovery and development while meeting regulatory expectations. For healthcare providers, it means AI that reduces burden and supports better care without eroding trust. For both, it means making deliberate choices about where to start, how to measure success and how to build governance into every stage.

Organizations that treat defensible AI as strategy—not just compliance—gain a competitive advantage. They move faster with confidence, knowing that their innovations can be trusted, explained, and defended. And in a field as sensitive as health, that combination of performance, trust, and accountability is what separates lasting impact from fleeting hype.

From awareness to action

The landscape of AI regulation will continue to evolve. New rules will emerge, standards will shift and technologies will advance. But waiting for clarity is not a strategy. The path forward is to build defensibility into AI today—across planning, data, model development, deployment and monitoring—so that organizations are ready no matter what comes.

Defensible AI is not just safe AI. It is useful, trusted and strategic AI. It is AI that delivers results clinicians can adopt, regulators can accept and patients can believe in. And it is the foundation for health and life sciences innovation that lasts.
